Reference: Linear regression (선형 회귀), Korean Wikipedia: https://ko.wikipedia.org/wiki/%EC%84%A0%ED%98%95_%ED%9A%8C%EA%B7%80

Linear regression is the area concerned with answering questions of the form "the expected value is ___" from numerical data.

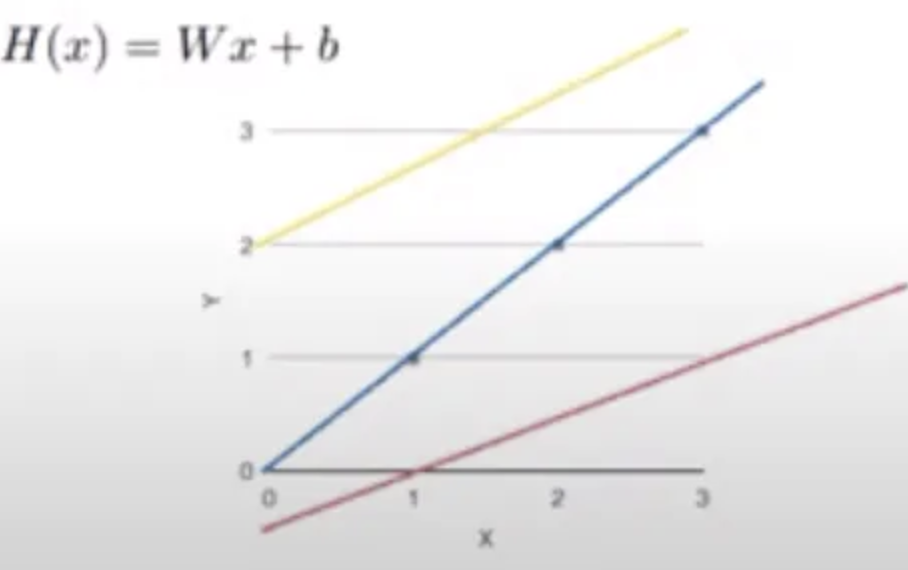

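Linear regression assumes that the predicted (estimated) value is a linear function of the input.

Since the prediction function is restricted to be linear, the task is to find which linear function best captures the trend in the data for our purpose.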
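For reference, the linear hypothesis can be written as below. The names H(x), W and b follow the ones used later in this post; the index notation x_i, y_i for the i-th of m data points is an assumption of this sketch.

$$
H(x) = Wx + b, \qquad H(x_i) \approx y_i \quad (i = 1, \dots, m)
$$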

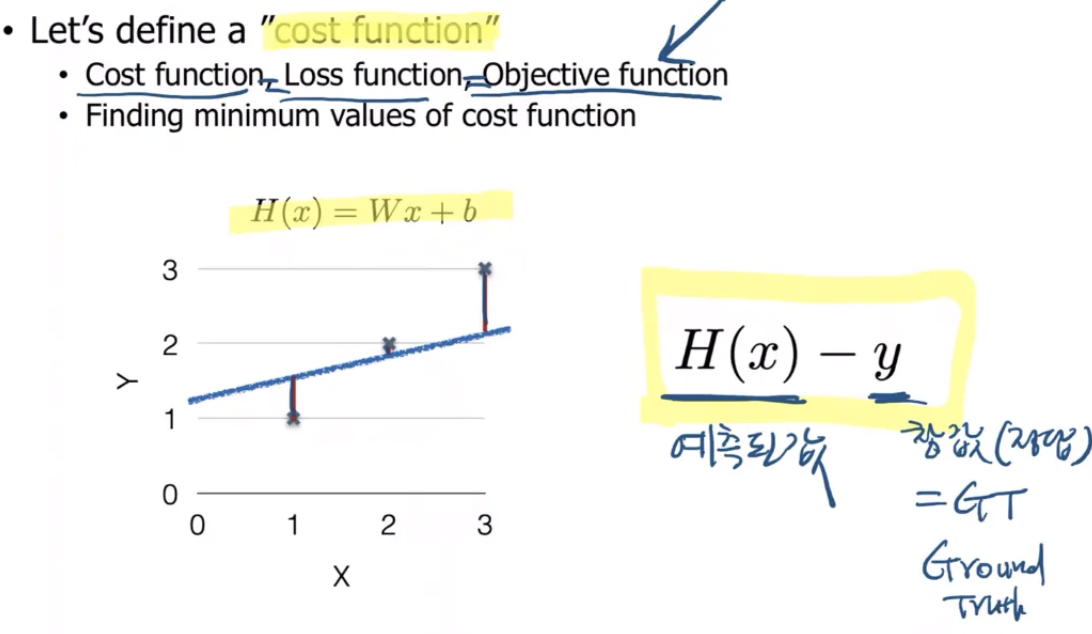

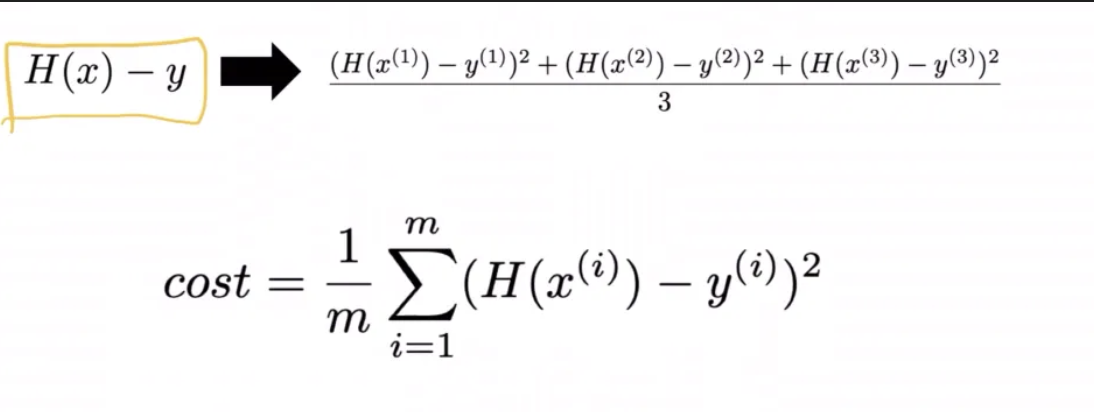

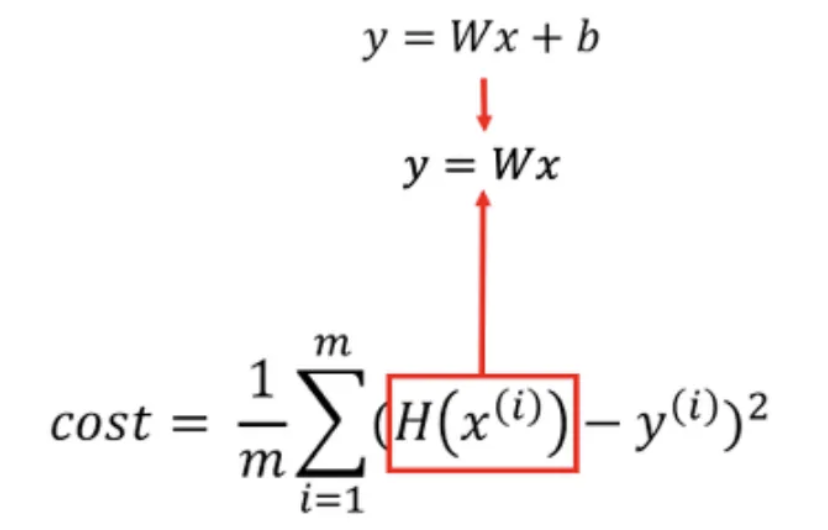

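To find that trend, we use a cost function: among the candidate lines, we choose the equation (trend) with the smallest error.

For each data point, subtract the actual value y from the predicted value H(x). Square the difference to turn the "amount of error" into a non-negative scalar, then take the average over all points.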

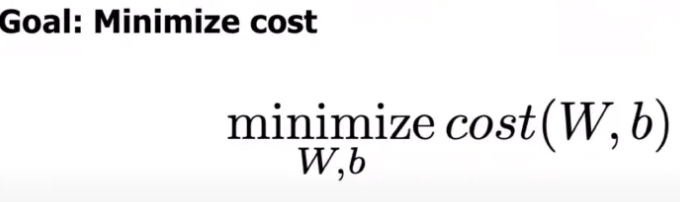
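The cost described above is the mean squared error (MSE). A minimal Python sketch, where the function name `cost` and the toy data (points lying exactly on y = 2x + 1) are made up for illustration:

```python
import numpy as np

def cost(W, b, x, y):
    """Mean squared error of the line H(x) = W*x + b on the data (x, y)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    h = W * x + b                  # predictions H(x)
    return np.mean((h - y) ** 2)   # square each error, then average

# hypothetical toy data lying exactly on y = 2x + 1
x = [1, 2, 3]
y = [3, 5, 7]

print(cost(2, 1, x, y))  # → 0.0 (the line passes through every point)
print(cost(2, 0, x, y))  # → 1.0 (every prediction is off by 1)
```

Because the errors are squared, a prediction that is off by 2 at one point costs four times as much as one that is off by 1.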

So, in the end, the goal of linear regression is to choose the predictor, that is, its parameters, so that the cost function becomes as small as possible.
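Written as a formula, under the same assumed notation (m data points (x_i, y_i)), the cost and the goal of minimizing it look like this:

$$
\operatorname{cost}(W, b) = \frac{1}{m}\sum_{i=1}^{m}\big(H(x_i) - y_i\big)^2,
\qquad
(W^*, b^*) = \arg\min_{W,\,b}\ \operatorname{cost}(W, b)
$$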

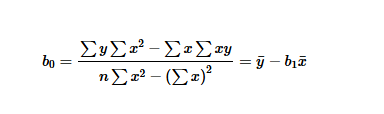

The goal of linear regression is to find the linear function Wx + b. The value of W is given by the formula below; in that formula, b0 corresponds to W.

Reference: "에라이, 일단 레츠 두 잇, 회귀분석 - OLS Regression 손으로 풀어보기" (roughly, "Oh well, let's just do it: regression analysis, working through OLS regression by hand"), recipesds.tistory.com

This has the form Cov(x, y)/Var(x): the covariance of x and y divided by the variance of x, which measures how much y tends to change per unit change in x.
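A short derivation of where the Cov(x, y)/Var(x) form comes from, sketched in the notation assumed here (m points, means x̄ and ȳ): set both partial derivatives of the mean squared error to zero, then substitute the first result into the second.

$$
\begin{aligned}
\frac{\partial\,\operatorname{cost}}{\partial b} &= \frac{2}{m}\sum_{i=1}^{m}\big(Wx_i + b - y_i\big) = 0
&&\Rightarrow\quad b = \bar{y} - W\bar{x} \\
\frac{\partial\,\operatorname{cost}}{\partial W} &= \frac{2}{m}\sum_{i=1}^{m}x_i\big(Wx_i + b - y_i\big) = 0
&&\Rightarrow\quad W = \frac{\sum_{i=1}^{m}(x_i-\bar{x})(y_i-\bar{y})}{\sum_{i=1}^{m}(x_i-\bar{x})^2} = \frac{\operatorname{Cov}(x,y)}{\operatorname{Var}(x)}
\end{aligned}
$$

The normalizing factors of the covariance and the variance cancel, so the slope is the same whether 1/m or 1/(m-1) is used. The numerator and denominator of W are the `dividend` and `divisor` in the code, and b = ȳ - Wx̄ is `b = my - (mx*a)`.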

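The code below implements this.

```python
import numpy as np

'''
reference : a simple OLS implementation.
Estimates the slope and intercept of ax + b in the form Cov(x, y) / Var(x).
'''

x = [2, 5, 8, 6]
y = [80, 62, 80, 99]

mx = np.mean(x)  # mean of x
my = np.mean(y)  # mean of y

### OLS (Ordinary Least Squares)

# denominator: sum of squared deviations of x
divisor = sum([(i - mx) ** 2 for i in x])

def top(x, mx, y, my):
    # numerator: sum of products of the deviations of x and y
    d = 0
    for i in range(len(x)):
        d += (x[i] - mx) * (y[i] - my)
    return d

dividend = top(x, mx, y, my)

a = dividend / divisor  # slope
b = my - (mx * a)       # intercept: the line passes through (mx, my)

print(f"slope = {a}, y-intercept = {b}")
```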

The output is the slope and intercept that define ax + b. In other words, from the data alone we obtain the equation of a straight line that represents the trend.

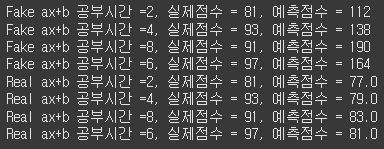
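As a quick cross-check, NumPy's `np.polyfit` with degree 1 fits the same least-squares line and returns the coefficients highest power first, i.e. [slope, intercept]. A minimal sketch on the same data as the OLS code above, for which the formula gives a slope of 1 and an intercept of 75:

```python
import numpy as np

x = [2, 5, 8, 6]
y = [80, 62, 80, 99]

# deg=1 fits a straight line; coefficients come back as [slope, intercept]
slope, intercept = np.polyfit(x, y, 1)
print(f"slope = {slope:.4f}, intercept = {intercept:.4f}")
# → slope = 1.0000, intercept = 75.0000
```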

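Using this line for prediction looks like the following.

```python
import numpy as np

'''
reference : ax + b function and prediction code for linear regression.
'''

# arbitrary (hand-picked) slope and intercept, for comparison
fake_a = 13
fake_b = 86

x = np.array([2, 4, 8, 6])      # study hours
y = np.array([81, 93, 91, 97])  # actual scores

def predict(x):
    return fake_a * x + fake_b

def real_predict(x):
    # a and b are the slope and intercept computed by the OLS code above
    return a * x + b

predict_result = []
for i in range(len(x)):  ### predict with an ax + b built from arbitrary values
    predict_result.append(predict(x[i]))
    print(f"Fake ax+b: study hours = {x[i]}, actual score = {y[i]}, predicted score = {predict_result[i]}")

predict_result = []
for i in range(len(x)):  ### predict with ax + b, where a = Cov(x, y) / Var(x) from data
    predict_result.append(real_predict(x[i]))
    print(f"Real ax+b: study hours = {x[i]}, actual score = {y[i]}, predicted score = {predict_result[i]}")
```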
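To put a number on "better prediction", the two lines can be scored with the mean squared error. Note that the OLS code above was fitted on a different sample (x = [2, 5, 8, 6]); this sketch refits OLS on the prediction data so both lines are scored on the same points. The helper name `mse` is made up for illustration.

```python
import numpy as np

x = np.array([2, 4, 8, 6])
y = np.array([81, 93, 91, 97])

def mse(a, b):
    # mean squared error of the line a*x + b on this data
    return np.mean((a * x + b - y) ** 2)

# OLS slope and intercept for this data, in the Cov(x, y) / Var(x) form
a_ols = np.sum((x - x.mean()) * (y - y.mean())) / np.sum((x - x.mean()) ** 2)
b_ols = y.mean() - a_ols * x.mean()

print(f"fake line (13x + 86): MSE = {mse(13, 86):.2f}")
print(f"OLS line ({a_ols:.2f}x + {b_ols:.2f}): MSE = {mse(a_ols, b_ols):.2f}")
# → fake line (13x + 86): MSE = 4319.00
# → OLS line (1.70x + 82.00): MSE = 20.30
```

No other straight line can score lower than the OLS line on this data, since OLS is by construction the minimizer of this cost.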

Above, we estimated the function ax + b using least squares (OLS). Below, we implement the linear regression with gradient descent.

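```python
import numpy as np
import matplotlib.pyplot as plt

'''
reference : estimating the linear regression function with gradient descent.
'''

a = 0  # slope, initialized to 0
b = 0  # intercept, initialized to 0

lr = 0.03  # learning rate

x = np.array([2, 4, 8, 6])  # arbitrary sample data
y = np.array([81, 93, 91, 97])

epochs = 2001  # number of training iterations over the data

n = len(x)

for i in range(epochs):
    y_pred = a * x + b  # linear equation
    error = y - y_pred  # error = actual y minus predicted y

    ## partial derivatives of the MSE with respect to a and b
    a_diff = -(2 / n) * sum(x * error)
    b_diff = -(2 / n) * sum(error)

    a = a - lr * a_diff  # parameter update
    b = b - lr * b_diff

    if i % 100 == 0:
        print(f"epoch = {i}, slope = {a}, y-intercept = {b}")

y_pred = a * x + b

plt.scatter(x, y)         # data points
plt.plot(x, y_pred, 'r')  # fitted line in red
plt.show()
```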
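Since the gradient descent code uses the same data as the prediction code, its result can be checked against the closed-form least-squares answer. A minimal sketch that repeats the same update rule and settings, replaces the plot with a printed comparison, and uses `np.polyfit` as the reference:

```python
import numpy as np

x = np.array([2, 4, 8, 6], dtype=float)
y = np.array([81, 93, 91, 97], dtype=float)
n = len(x)

# gradient descent on the MSE, same settings as the code above
a, b, lr = 0.0, 0.0, 0.03
for _ in range(2001):
    error = y - (a * x + b)
    a -= lr * (-(2 / n) * np.sum(x * error))
    b -= lr * (-(2 / n) * np.sum(error))

# closed-form least squares for comparison: [slope, intercept]
a_ols, b_ols = np.polyfit(x, y, 1)

print(f"gradient descent: a = {a:.4f}, b = {b:.4f}")
print(f"closed form:      a = {a_ols:.4f}, b = {b_ols:.4f}")
```

With these settings the two agree to several decimal places (slope about 1.7, intercept about 82). The learning rate matters: for this data the updates stay stable only for lr below roughly 0.032, so a value such as 0.04 makes the slope and intercept diverge instead of converging.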

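Conclusion: linear regression "predicts values". It finds the trend that predicts well by reducing the difference between predicted and actual values, and it can be solved either with OLS or with gradient descent.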
